Introduction

In modern programming, character encoding is central to processing text data. The Unicode standard assigns a unique numerical value (code point) to every character it covers, across the world’s writing systems. UTF32 (also written UTF-32) is a Unicode encoding that represents each code point using a fixed 32-bit length. In this article, we’ll dive into what UTF32 Encode is and how it works.

What is UTF32 Encode?

UTF32 Encode is a character encoding that uses 32 bits to represent each Unicode code point. Every code point is stored as its integer value in exactly four bytes, providing a fixed-width format. UTF32 encoding goes by several names, including UCS-4 (the four-byte form of the Universal Character Set), ISO-10646-UCS-4, and Unicode Transformation Format, 32 bits.

How does UTF32 Encode work?

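UTF32 encoding works by storing each Unicode code point directly as a 32-bit integer (4 bytes). Because code points range from U+0000 to U+10FFFF, only 21 of the 32 bits are ever needed, and the remaining high bits are always zero. This encoding can represent every character in the Unicode standard, which encompasses over 140,000 characters across various scripts used worldwide. UTF-8 and UTF-16 cover the same set of characters; what distinguishes UTF32 is that every code point occupies the same number of bytes.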
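The mapping from code point to four bytes can be sketched by hand. The snippet below is a minimal illustration, not a replacement for the built-in codec: the helper name `encode_utf32_le` is hypothetical, and it assumes little-endian byte order with no byte order mark.

```python
import struct


def encode_utf32_le(text: str) -> bytes:
    """Encode text as little-endian UTF-32 without a byte order mark.

    Hypothetical helper for illustration only.
    """
    # ord() yields the code point; "<I" packs it as a 4-byte
    # little-endian unsigned integer.
    return b"".join(struct.pack("<I", ord(ch)) for ch in text)


sample = "Hi\u20ac\U0001F600"  # "H", "i", euro sign, grinning-face emoji
manual = encode_utf32_le(sample)

print(len(manual))                          # 16 (4 code points * 4 bytes)
print(manual == sample.encode("utf-32-le"))  # True
```

The comparison against Python’s own `utf-32-le` codec confirms that, for this sample, the encoding is nothing more than each code point written out as a 32-bit integer.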

Sample code

Here’s an example of how to encode a string using the UTF32 encoding scheme in Python:

text = "Hello World"

encoded_utf32 = text.encode("utf-32")

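print(list(encoded_utf32))

On a little-endian machine, the output of this code will be: [255, 254, 0, 0, 72, 0, 0, 0, 101, 0, 0, 0, 108, 0, 0, 0, 108, 0, 0, 0, 111, 0, 0, 0, 32, 0, 0, 0, 87, 0, 0, 0, 111, 0, 0, 0, 114, 0, 0, 0, 108, 0, 0, 0, 100, 0, 0, 0]

The first four bytes (255, 254, 0, 0) are the byte order mark that Python’s "utf-32" codec prepends. Each following group of four bytes is one character, stored least significant byte first.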
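The leading 255, 254, 0, 0 in that output is a byte order mark, and the byte order of the plain "utf-32" codec follows the machine it runs on. As a small sketch of how to make the byte order explicit, Python also provides the "utf-32-le" and "utf-32-be" codecs, which write no byte order mark:

```python
text = "Hello World"

with_bom = text.encode("utf-32")   # byte order mark + native byte order
little = text.encode("utf-32-le")  # little-endian, no byte order mark
big = text.encode("utf-32-be")     # big-endian, no byte order mark

print(len(with_bom), len(little), len(big))  # 48 44 44
print(list(little[:4]))                      # [72, 0, 0, 0]
print(list(big[:4]))                         # [0, 0, 0, 72]

# Decoding with "utf-32" reads the byte order mark and strips it.
print(with_bom.decode("utf-32") == text)     # True
```

Choosing an explicit byte order is usually the safer option when the bytes will be read on another machine or by another program.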

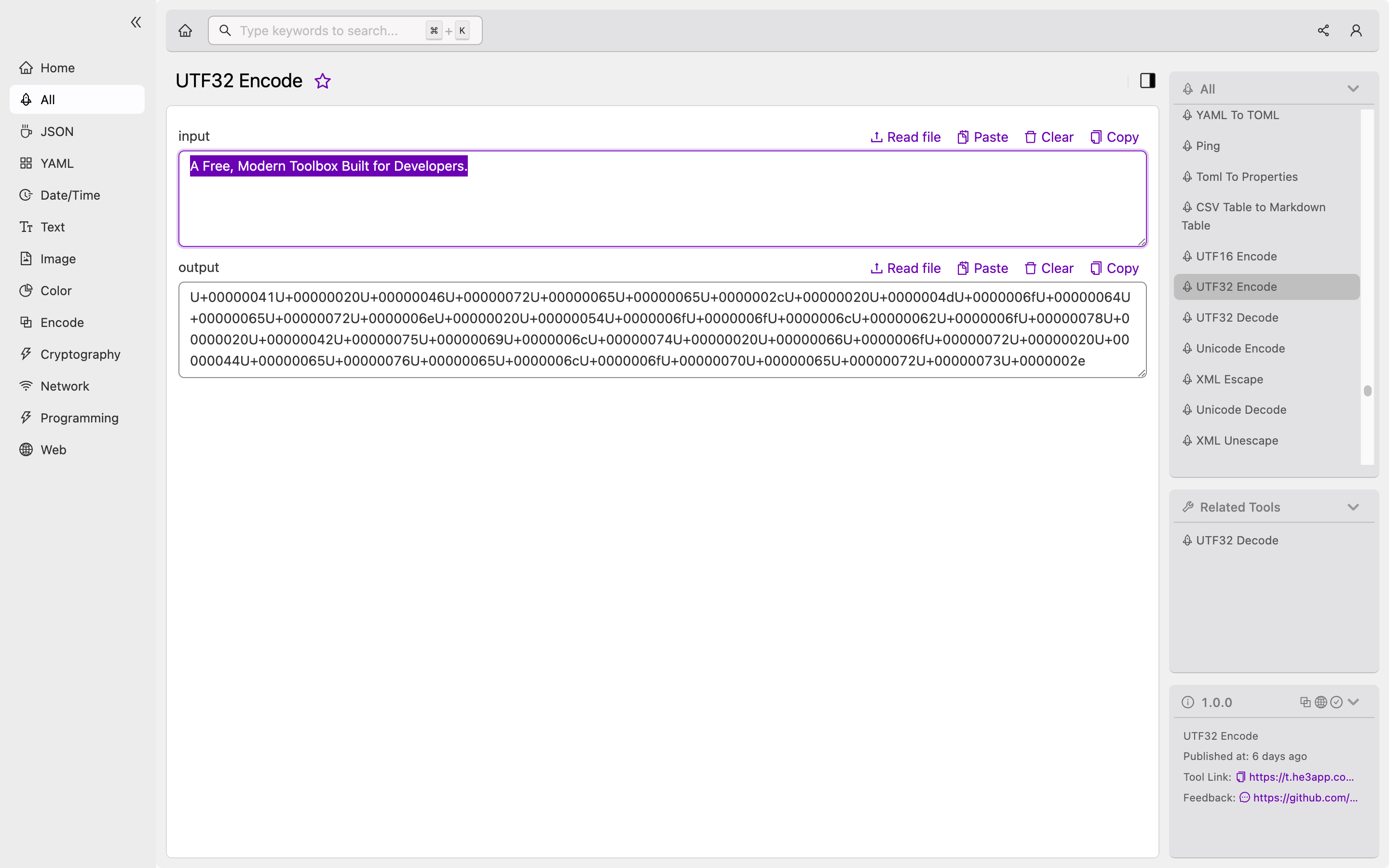

Alternatively, you can use the UTF32 Encode tool in He3 Toolbox (https://t.he3app.com?g5y0).

Key Features of UTF32 Encode

Here are some of the key features of UTF32 Encode:

- 32-bit fixed-width format for each encoded character

- Can represent every character in the Unicode standard

- Supports encoding and decoding of text data in various programming languages

- Often used as an internal representation when code needs to index or iterate over individual code points, regardless of script

Scenarios of UTF32 Encode for Developers

Developers can use UTF32 encoding in various scenarios, including:

- Storing and processing text data in databases and applications where every code point should occupy the same number of bytes, regardless of script

- Handling text in multiple scripts inside web applications before it is converted for delivery, since web pages themselves are normally served as UTF-8

- Developing software that processes machine-readable text data, such as XML or JSON, which may include characters from various language scripts; these formats are typically exchanged as UTF-8, so UTF32 usually serves as the in-memory form

Misconceptions about UTF32 Encode

Here are some common misconceptions about UTF32 Encode:

Myth 1: UTF32 Encoding consumes too much memory

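UTF32 encoding does use four bytes for every character, so memory usage is higher than with other Unicode encodings: up to four times that of UTF-8 for ASCII-heavy text. Whether that is too much depends on the workload. Because the format is fixed-width, the n-th code point always starts at byte offset 4 × n, which can provide better performance in situations where random access to string elements is necessary.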
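The random-access property can be sketched as follows. The helper name `code_point_at` is hypothetical, and the example assumes little-endian UTF32 data without a byte order mark:

```python
def code_point_at(utf32_le: bytes, index: int) -> str:
    """Return the code point at position `index` in BOM-less UTF-32-LE data.

    Hypothetical helper for illustration only.
    """
    start = index * 4  # every code point occupies exactly four bytes
    return utf32_le[start:start + 4].decode("utf-32-le")


# "naive" with a diaeresis on the i, a space, an emoji, a space, "text"
data = "na\u00efve \U0001F600 text".encode("utf-32-le")

print(code_point_at(data, 2) == "\u00ef")      # True
print(code_point_at(data, 6) == "\U0001F600")  # True
```

No scanning is needed to find position 6, even though the emoji would take four bytes in UTF-8 and two code units in UTF-16. Note that this indexes code points, which are not always the same as user-perceived characters when combining marks are involved.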

Myth 2: UTF32 Encoding is the best Unicode encoding standard

While UTF32 encoding can represent every Unicode code point, so can UTF-8 and UTF-16, and they often offer more compact representations of text data. For instance, UTF-8 represents each code point using a variable-length sequence of one to four bytes, which can save storage space, especially for ASCII-heavy text.
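To make the size difference concrete, here is a small sketch that measures the same strings in three encodings. The helper name `encoded_sizes` and the sample strings are chosen for illustration, and the BOM-less little-endian codecs are used so that only the character data is counted:

```python
def encoded_sizes(text: str) -> dict:
    """Return the encoded length in bytes of `text` for three Unicode encodings."""
    codecs = ("utf-8", "utf-16-le", "utf-32-le")
    return {codec: len(text.encode(codec)) for codec in codecs}


samples = {
    "ASCII": "Hello World",
    "Greek": "\u03b1\u03b2\u03b3",  # alpha, beta, gamma
    "Emoji": "\U0001F600",          # a single code point outside the BMP
}

for label, text in samples.items():
    print(label, encoded_sizes(text))
# ASCII {'utf-8': 11, 'utf-16-le': 22, 'utf-32-le': 44}
# Greek {'utf-8': 6, 'utf-16-le': 6, 'utf-32-le': 12}
# Emoji {'utf-8': 4, 'utf-16-le': 4, 'utf-32-le': 4}
```

For these samples UTF32 is never smaller than the alternatives, and it only ties them for the emoji, a code point outside the Basic Multilingual Plane.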

Frequently Asked Questions (FAQs)

Here are some commonly asked questions about UTF32 Encode:

Q1. Which programming languages support UTF32 encoding?

Many programming languages support UTF32 encoding, including Python, Java, C++, and C#. Developers can encode or decode UTF32 text data using built-in functions or third-party libraries.

Q2. Can I convert UTF32 encoded text data to other Unicode encoding standards?

Yes, developers can convert UTF32 encoded text data to other Unicode encoding standards, such as UTF-8 or UTF-16, using various encoding libraries or built-in functions available in programming languages.
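In Python, such a conversion is a decode followed by an encode. The following is a minimal sketch using only built-in codecs; the helper name `transcode` is hypothetical:

```python
def transcode(data: bytes, source: str, target: str) -> bytes:
    """Re-encode `data` from the `source` encoding to the `target` encoding.

    Hypothetical helper for illustration only.
    """
    # Decoding yields a Python str (a sequence of code points),
    # which can then be encoded into any other Unicode encoding.
    return data.decode(source).encode(target)


utf32_bytes = "Hello World".encode("utf-32")  # includes a byte order mark

utf8_bytes = transcode(utf32_bytes, "utf-32", "utf-8")
utf16_bytes = transcode(utf32_bytes, "utf-32", "utf-16-le")

print(utf8_bytes)                         # b'Hello World'
print(len(utf8_bytes), len(utf16_bytes))  # 11 22
```

Because all three encodings cover the same set of code points, the conversion is lossless in both directions for valid input.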

Conclusion

UTF32 Encode is a Unicode encoding that provides a fixed-width format for representing characters using 32 bits. This encoding scheme supports every Unicode code point and trades extra storage for simple, constant-time indexing, which makes it a reasonable choice where direct access to individual code points matters more than size. Developers can use it in various programming languages, including Python, Java, C++, and C#, to store and process text data.
