Introduction

UTF16 (16-bit Unicode Transformation Format) is a character encoding that can represent every character defined in the Unicode standard. It stores text as a sequence of 16-bit code units: characters in the Basic Multilingual Plane (U+0000 to U+FFFF) take one code unit, and all other characters take two. While UTF8 is the dominant encoding on the web, UTF16 is widely used in programming environments and data storage. UTF16 Decode is the process of taking a sequence of UTF16-encoded bytes and converting it back into the human-readable characters it represents. In this article, we look at how UTF16 Decode works and at scenarios where developers need it.

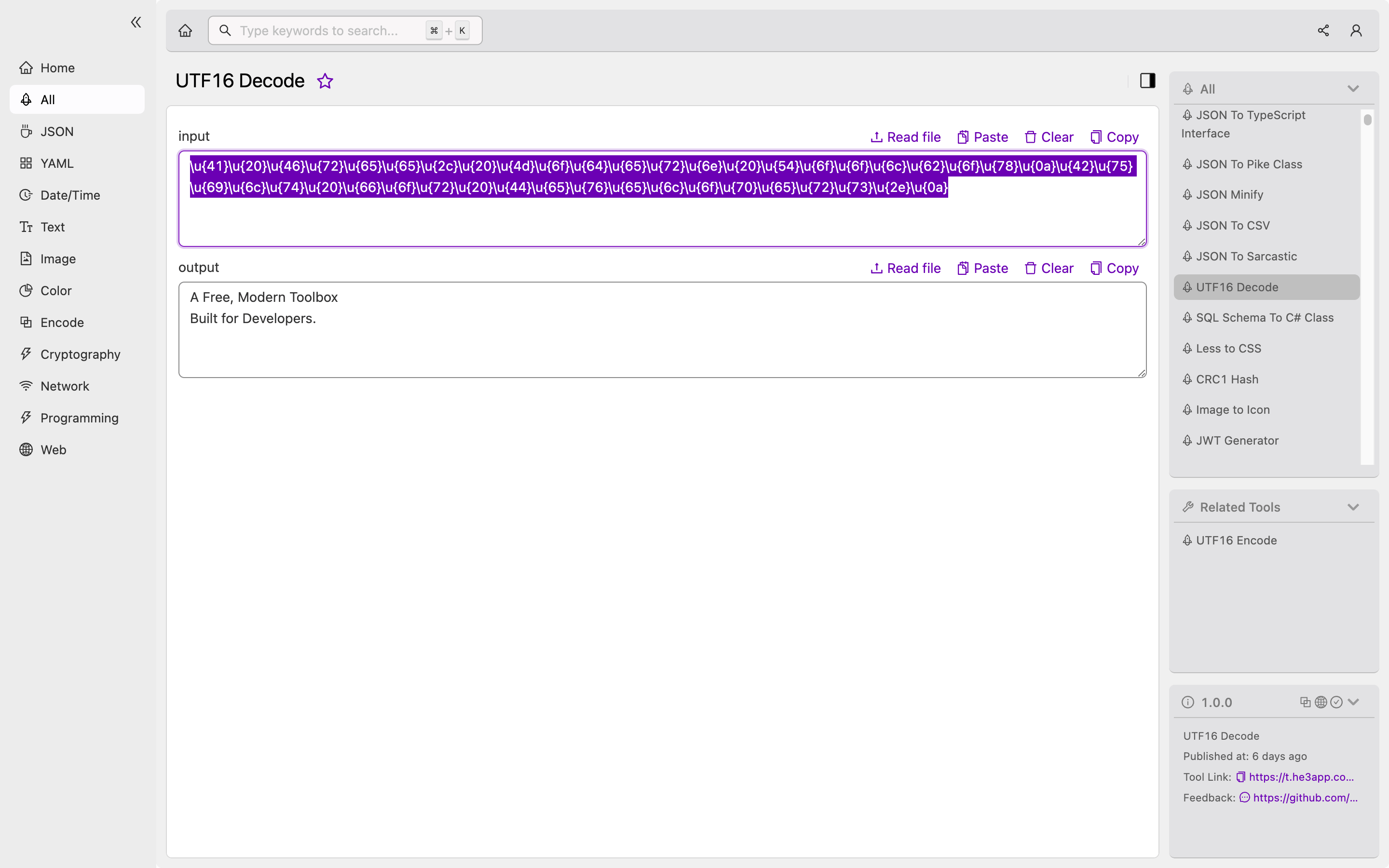

How to Use a UTF16 Decode Tool

UTF16 Decode can be implemented in various programming languages, including Java, Python, JavaScript, and C++, or you can use the UTF16 Decode tool in He3 Toolbox (https://t.he3app.com?u4l8). Here is how it works in Python:

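```python
# UTF16-encoded bytes: a little-endian BOM (FF FE) followed by the text,
# two bytes per character, least significant byte first.
encoded_string = b'\xff\xfeT\x00h\x00i\x00s\x00 \x00i\x00s\x00 \x00a\x00 \x00t\x00e\x00s\x00t\x00 \x00s\x00t\x00r\x00i\x00n\x00g\x00.\x00'

# The generic 'utf-16' codec reads the BOM to choose the byte order and strips it.
decoded_string = encoded_string.decode('utf-16')

print(decoded_string)
```

This code will output the following human-readable string: “This is a test string.” The first two bytes, b'\xff\xfe', are a Byte Order Mark (BOM) indicating that the data is little-endian. Python's 'utf-16' codec uses the BOM to detect the byte order and removes it from the result, and most text editors that support UTF16 do the same.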
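The built-in codec hides the mechanics, so the sketch below spells them out: it reads an optional BOM, splits the remaining bytes into 16-bit code units, and combines surrogate pairs into single code points. `decode_utf16` is a hypothetical helper written for illustration, not part of any library. It assumes big-endian input when no BOM is present (the Unicode Standard's default, which is not necessarily what Python's generic 'utf-16' codec does) and simply raises `ValueError` on malformed input instead of offering error-handling modes.

```python
import codecs


def decode_utf16(data: bytes) -> str:
    """Minimal UTF16 decoder sketch (hypothetical helper, for illustration only).

    A leading BOM selects the byte order and is stripped; without a BOM the
    data is assumed to be big-endian.
    """
    if data.startswith(codecs.BOM_UTF16_LE):      # b'\xff\xfe'
        byteorder, data = "little", data[2:]
    elif data.startswith(codecs.BOM_UTF16_BE):    # b'\xfe\xff'
        byteorder, data = "big", data[2:]
    else:
        byteorder = "big"

    if len(data) % 2:
        raise ValueError("UTF16 data must contain an even number of bytes")

    # Split the byte string into 16-bit code units in the chosen byte order.
    units = [int.from_bytes(data[i:i + 2], byteorder) for i in range(0, len(data), 2)]

    chars = []
    i = 0
    while i < len(units):
        unit = units[i]
        if 0xD800 <= unit <= 0xDBFF:
            # High surrogate: it must be followed by a low surrogate.
            if i + 1 >= len(units) or not 0xDC00 <= units[i + 1] <= 0xDFFF:
                raise ValueError(f"unpaired high surrogate at code unit {i}")
            low = units[i + 1]
            chars.append(chr(0x10000 + ((unit - 0xD800) << 10) + (low - 0xDC00)))
            i += 2
        elif 0xDC00 <= unit <= 0xDFFF:
            # A low surrogate with no preceding high surrogate is invalid.
            raise ValueError(f"unpaired low surrogate at code unit {i}")
        else:
            # Any other code unit maps directly to a single character.
            chars.append(chr(unit))
            i += 1
    return "".join(chars)


print(decode_utf16(b"\xff\xfeH\x00i\x00"))  # Hi
```

In real code, `bytes.decode('utf-16')` is faster and supports error handlers such as 'replace'; the sketch only makes the individual steps visible.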

Key Features

| Feature | Description |
|---|---|
| Unicode Support | UTF16 Decode can handle every character in the Unicode standard, including characters outside the Basic Multilingual Plane that are encoded as surrogate pairs. |
| Byte Order Mark | UTF16 Decode can use a Byte Order Mark (BOM) to detect the byte order and remove the BOM from the decoded string. |
| Multilingual Support | UTF16 Decode can decode text that mixes several scripts and languages in a single string. |
| High-Quality Output | UTF16 Decode reproduces the original characters exactly when the input is valid UTF16. |
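As a rough illustration of the multilingual-support and output-quality rows, the Python sketch below round-trips a mixed-script string (the sample text is arbitrary) through UTF16 and then shows how the built-in decoder treats truncated input: the default strict mode raises `UnicodeDecodeError`, while `errors="replace"` substitutes U+FFFD for the damaged tail.

```python
# Mixed-script sample text, including a character outside the BMP (😀).
text = "Hello, 世界! Привет! 😀!"

# encode("utf-16") prepends a BOM; decode("utf-16") uses it and strips it.
encoded = text.encode("utf-16")
assert encoded.decode("utf-16") == text

# Drop the final byte so the last code unit is incomplete.
truncated = encoded[:-1]

try:
    truncated.decode("utf-16")          # strict mode is the default
    strict_ok = True
except UnicodeDecodeError:
    strict_ok = False

# With errors="replace", the incomplete tail becomes U+FFFD instead of raising.
lenient = truncated.decode("utf-16", errors="replace")
print(strict_ok, repr(lenient))
```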

Scenarios for Developers

UTF16 Decode is useful in several scenarios. One common use case is handling text in databases or storage systems that store data as UTF16. It is also helpful when working with platforms whose native string representation is UTF16, such as Java, JavaScript, and the Windows API, because text exchanged with these platforms may arrive as raw UTF16 bytes. Developers may also encounter UTF16-encoded data when processing non-ASCII text produced by such systems.
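For the data-storage scenario, decoding in Python is often just a matter of opening the file with the right encoding. This sketch writes a temporary file as UTF16 and reads it back; the temporary file is an assumption standing in for data exported by a UTF16-based system.

```python
import codecs
import os
import tempfile

text = "Multilingual sample: café, 日本語, 😀"

# Write the text as UTF16; Python's "utf-16" codec prepends a BOM.
with tempfile.NamedTemporaryFile("w", encoding="utf-16", suffix=".txt", delete=False) as f:
    f.write(text)
    path = f.name

try:
    # Inspect the raw bytes: the first two should be a BOM (FF FE or FE FF).
    with open(path, "rb") as f:
        raw = f.read()
    has_bom = raw[:2] in (codecs.BOM_UTF16_LE, codecs.BOM_UTF16_BE)

    # Reading in text mode with encoding="utf-16" decodes and strips the BOM.
    with open(path, encoding="utf-16") as f:
        round_trip = f.read()
finally:
    os.remove(path)

print(has_bom, round_trip == text)
```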

Misconceptions and FAQs

Misconception: UTF16 is Always Big Endian

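When there is no BOM or other byte-order information, the Unicode Standard specifies big-endian as the default interpretation of UTF16 data, but little-endian UTF16 is widespread in practice; Windows, for example, uses it internally. In little-endian UTF16, the least significant byte of each 16-bit code unit is stored first. UTF16 Decode can handle both big-endian and little-endian byte orders.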
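The sketch below shows why byte order matters: the same characters produce different bytes in each order, and decoding with the wrong order does not raise an error but silently produces unrelated characters. For BOM-less data, naming the byte order explicitly with Python's 'utf-16-be' or 'utf-16-le' codecs avoids relying on any codec's default.

```python
import codecs

# The same two characters, "Hi" (U+0048 U+0069), in each byte order.
big_endian = b"\x00H\x00i"      # most significant byte first
little_endian = b"H\x00i\x00"   # least significant byte first

assert big_endian.decode("utf-16-be") == "Hi"
assert little_endian.decode("utf-16-le") == "Hi"

# The wrong byte order does not fail: 0x00,0x48 is read as U+4800, and so on.
wrong = big_endian.decode("utf-16-le")
assert wrong == "\u4800\u6900"

# With a BOM in front, the generic "utf-16" codec picks the order by itself.
with_bom = codecs.BOM_UTF16_BE + big_endian
assert with_bom.decode("utf-16") == "Hi"
```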

FAQ #1: Can UTF16 Decode Convert UTF8 Strings to Human-Readable Strings?

No. UTF8 and UTF16 are different encodings of Unicode, so the same text produces different bytes in each. UTF16 Decode only accepts UTF16-encoded input and turns it into human-readable strings; to decode UTF8-encoded strings, you need a UTF8 decoder.
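To see why the decoder has to match the encoding, compare the bytes for the same text in both encodings. In this small Python sketch, the two ASCII bytes of UTF8 "Hi" are read by a UTF16 decoder as a single 16-bit code unit, 0x6948, which is an unrelated CJK character.

```python
text = "Hi"
utf8_bytes = text.encode("utf-8")        # b'Hi': one byte per ASCII character
utf16_bytes = text.encode("utf-16-le")   # b'H\x00i\x00': two bytes per code unit

# Each byte sequence decodes correctly only with its matching codec.
assert utf8_bytes.decode("utf-8") == text
assert utf16_bytes.decode("utf-16-le") == text

# A UTF16 decoder pairs the two UTF8 bytes into one code unit, U+6948.
mismatch = utf8_bytes.decode("utf-16-le")
assert mismatch == "\u6948"
```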

FAQ #2: Is UTF16 Limited to 16-bit Characters?

No. UTF16 can also encode characters whose code points lie beyond the 16-bit range (above U+FFFF). Each such character is represented by a surrogate pair: two 16-bit code units, a high surrogate (U+D800 to U+DBFF) followed by a low surrogate (U+DC00 to U+DFFF).
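The surrogate-pair arithmetic is short enough to write out. To encode a code point above U+FFFF, subtract 0x10000, then add the top 10 bits of the resulting 20-bit value to 0xD800 and the bottom 10 bits to 0xDC00; decoding reverses those steps. The helper names below are hypothetical, and the sketch checks its result against Python's built-in 'utf-16-be' encoder using U+1F600 (😀) as the example.

```python
def to_surrogate_pair(code_point: int) -> tuple[int, int]:
    """Split a supplementary code point (U+10000..U+10FFFF) into two code units."""
    if not 0x10000 <= code_point <= 0x10FFFF:
        raise ValueError("only code points above U+FFFF need a surrogate pair")
    offset = code_point - 0x10000        # a 20-bit value
    high = 0xD800 + (offset >> 10)       # top 10 bits
    low = 0xDC00 + (offset & 0x3FF)      # bottom 10 bits
    return high, low


def from_surrogate_pair(high: int, low: int) -> int:
    """Combine a high and a low surrogate back into the original code point."""
    return 0x10000 + ((high - 0xD800) << 10) + (low - 0xDC00)


high, low = to_surrogate_pair(0x1F600)   # U+1F600 GRINNING FACE
print(hex(high), hex(low))               # 0xd83d 0xde00

# The built-in big-endian encoder produces the same two code units.
assert "\U0001F600".encode("utf-16-be") == bytes([high >> 8, high & 0xFF, low >> 8, low & 0xFF])
assert from_surrogate_pair(high, low) == 0x1F600
```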

Conclusion

UTF16 Decode is an essential tool for handling and processing UTF16-encoded strings. It can be implemented in various programming languages or used directly through a UTF16 Decode tool such as He3 Toolbox (https://t.he3app.com?u4l8). Developers working with multilingual text or with storage systems that use UTF16 encoding should consider UTF16 Decode to ensure their text is decoded accurately.
