Have you ever noticed duplicated data in your dataset, especially text data? Text Deduplication, also known as Duplicate Detection, is a technique for identifying and eliminating repeated data points in text. It is used in fields such as data cleaning, search engines, e-commerce, social media analysis, and machine learning. In this article, we will explore the concept of Text Deduplication, how it works, its key features, and scenarios where developers can apply it in their projects.

How Text Deduplication Works

Text Deduplication is a multistep process of identifying and removing (or merging) duplicated text data points. The technique relies on various algorithms and models such as Levenshtein distance, Jaccard similarity, cosine similarity, and deep learning.

The first step in Text Deduplication is to preprocess the text data, which usually involves tokenizing, stemming, removing stop words, and normalizing the text (for example, lowercasing it and stripping punctuation). Then similarity measures such as Levenshtein distance and Jaccard similarity are used to compare the text data points and identify potential duplicates. For instance, the Levenshtein distance between two strings is the minimum number of single-character insertions, deletions, and substitutions required to transform one string into the other, while the Jaccard similarity of two sets is the size of their intersection divided by the size of their union.
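The measures described above can be sketched in plain Python. This is a minimal illustration, not a production implementation: the function names, the tiny stop-word list, and the choice to skip stemming are all assumptions made for the example.

```python
import re

# Tiny illustrative stop-word list (an assumption); real projects use a fuller one.
STOP_WORDS = {"a", "an", "the", "is", "of", "and"}

def preprocess(text):
    """Lowercase, strip punctuation, split into tokens, and drop stop words."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]

def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions, or substitutions."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]

def jaccard(tokens_a, tokens_b):
    """Size of the intersection divided by the size of the union of two token sets."""
    set_a, set_b = set(tokens_a), set(tokens_b)
    if not set_a and not set_b:
        return 1.0  # assumption: two empty inputs count as identical
    return len(set_a & set_b) / len(set_a | set_b)

print(levenshtein("kitten", "sitting"))  # 3
print(jaccard(preprocess("The quick brown fox"),
              preprocess("A quick brown dog")))  # 0.5
```

Levenshtein distance works on characters, so it suits typos and small edits, while Jaccard similarity works on token sets and ignores word order.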

Once potential duplicates are identified, the technique can employ further methods such as cosine similarity over vector representations and deep learning models to distinguish between true duplicates and similar but distinct data points. Cosine similarity measures the cosine of the angle between two vectors, so it captures how closely their directions align regardless of their magnitudes, while deep learning models such as Siamese networks can learn to encode and compare text data points to detect similarities and differences.
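As a rough illustration of cosine similarity, the sketch below represents each text as a vector of word counts. The function name and the whitespace tokenization are assumptions for the example; real systems often use TF-IDF weights or learned embeddings instead.

```python
import math
from collections import Counter

def cosine_similarity(text_a, text_b):
    """Cosine of the angle between the word-count vectors of two texts."""
    counts_a = Counter(text_a.lower().split())
    counts_b = Counter(text_b.lower().split())
    # Words missing from counts_b contribute 0 to the dot product.
    dot = sum(counts_a[word] * counts_b[word] for word in counts_a)
    norm_a = math.sqrt(sum(c * c for c in counts_a.values()))
    norm_b = math.sqrt(sum(c * c for c in counts_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # assumption: an empty text is similar to nothing
    return dot / (norm_a * norm_b)

# Repeating a text lengthens its vector but does not change its direction.
print(round(cosine_similarity("free shipping", "free shipping free shipping"), 3))  # 1.0
print(cosine_similarity("red shoes", "blue hat"))  # 0.0
```

Because only the direction of the vectors matters, a short text and a longer text that repeats the same words score as near-identical, which may or may not be what a given project wants.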

Code Snippet

Here is a code snippet illustrating how to implement Text Deduplication using Python’s fuzzywuzzy library:

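    from fuzzywuzzy import fuzz

    def find_duplicates(data, threshold=90):
        """Map each index in data to the indices of its likely duplicates."""
        duplicates = {}
        for i, d1 in enumerate(data):
            # Compare each item only with the items after it, so every pair is scored once.
            for j in range(i + 1, len(data)):
                sim_score = fuzz.token_set_ratio(d1, data[j])  # integer score from 0 to 100
                if sim_score > threshold:
                    # Record the match in both directions.
                    duplicates.setdefault(i, []).append(j)
                    duplicates.setdefault(j, []).append(i)
        return duplicates

This code snippet uses the fuzzywuzzy library to compute the token set ratio between two text data points and flags them as duplicates if their similarity score is above 90. Because it compares every pair of items, its running time grows quadratically with the size of the dataset.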
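Once duplicates are identified, they still have to be removed or merged. The sketch below shows one possible way to do that, assuming a dictionary in the same shape as the one `find_duplicates` returns. The name `drop_duplicates` and the keep-the-first-record policy are assumptions for illustration, and the example dictionary is written by hand rather than produced by fuzzywuzzy.

```python
def drop_duplicates(data, duplicates):
    """Keep the first record of every duplicate group.

    `duplicates` maps an index to the indices it matched, in the same
    shape as the dictionary returned by find_duplicates.
    """
    seen = set()
    kept = []
    for start in range(len(data)):
        if start in seen:
            continue
        kept.append(data[start])
        # Walk the match graph so that chains (A~B, B~C) land in one group.
        stack = [start]
        seen.add(start)
        while stack:
            current = stack.pop()
            for neighbour in duplicates.get(current, []):
                if neighbour not in seen:
                    seen.add(neighbour)
                    stack.append(neighbour)
    return kept

# Hand-written example input (hypothetical match results).
data = ["Apple iPhone 13", "iPhone 13 Apple", "Samsung Galaxy S21", "apple iphone 13"]
matches = {0: [1, 3], 1: [0, 3], 3: [0, 1]}
print(drop_duplicates(data, matches))  # ['Apple iPhone 13', 'Samsung Galaxy S21']
```

Grouping matches transitively is a design choice: it keeps the output small, but a long chain of loosely similar records can end up merged into one group, so a stricter threshold may be needed when using it.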

Scenarios for Developers

Text Deduplication can be used in various scenarios for developers. Here are a few examples:

- Data cleaning: Text Deduplication can help clean up duplicated text data points in datasets and improve the quality of the data.

- Search engines: Text Deduplication can help search engines eliminate duplicate search results and provide more relevant and diverse results to users.

- E-commerce: Text Deduplication can help e-commerce platforms detect and eliminate duplicated product descriptions, reviews, and user-generated content to provide a better shopping experience to customers.

- Social media analysis: Text Deduplication can help social media platforms detect and eliminate duplicated posts, comments, and messages to reduce clutter and improve engagement.

- Machine learning: Text Deduplication can help improve the performance and efficiency of machine learning models by eliminating duplicate or redundant data points.

Key Features

Here are some key features of Text Deduplication techniques:

| Feature | Description |
|---|---|
| Preprocessing | Text Deduplication techniques require preprocessing text data for better comparison and analysis. |
| Similarity measures | Text Deduplication techniques use various similarity measures such as Levenshtein distance, Jaccard similarity, and cosine similarity to identify duplicate text data points. |
| Algorithms | Text Deduplication techniques use various algorithms such as clustering, fingerprinting, and deep learning models to distinguish between true duplicates and similar but distinct data points. |
| Performance | With optimizations such as indexing, hashing, and parallel processing, Text Deduplication techniques can scale to large datasets and handle various types and formats of text data. |
| Accuracy | With a well-chosen similarity threshold, Text Deduplication techniques can catch near-duplicates that manual review or exact-match rules miss, although false positives and false negatives remain possible. |

Misconceptions and FAQs

Misconception: Text Deduplication is an exact method.

Text Deduplication relies on similarity measures and algorithms that provide a degree of confidence in identifying duplicate data points rather than an exact solution. False negatives and false positives are possible, and the threshold for similarity scores may need to be adjusted based on the context and the type of data.
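The effect of the threshold can be seen by scoring the same records at several cut-offs. The sketch below is only an illustration: it swaps in the standard library's `difflib.SequenceMatcher` as the similarity measure (not fuzzywuzzy), and the function names and sample records are made up for the example.

```python
from difflib import SequenceMatcher
from itertools import combinations

def similarity(a, b):
    """Score from 0 to 100 based on difflib's matching-character ratio."""
    return 100 * SequenceMatcher(None, a.lower(), b.lower()).ratio()

def flagged_pairs(records, threshold):
    """Return index pairs whose similarity score exceeds the threshold."""
    return [(i, j)
            for (i, a), (j, b) in combinations(enumerate(records), 2)
            if similarity(a, b) > threshold]

# Made-up records for illustration only.
records = [
    "Wireless mouse, black",
    "Wireless mouse - black",
    "Wireless mouse, white",
    "USB-C charging cable",
]

# A lower threshold flags more pairs; a higher one flags fewer.
for threshold in (60, 80, 95):
    print(threshold, flagged_pairs(records, threshold))
```

A low threshold risks flagging distinct items (here, the black and white mice) as duplicates, while a high threshold risks missing records that differ only in punctuation. Checking a sample of flagged pairs by hand is one way to pick a value for a given dataset.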

FAQ 1: How can I improve the accuracy of Text Deduplication?

You can improve the accuracy of Text Deduplication by using multiple similarity measures and algorithms, adjusting the similarity threshold, and incorporating domain-specific knowledge or rules. Machine learning models such as Siamese networks can also improve the accuracy of Text Deduplication by learning from examples and feedback.

FAQ 2: Is Text Deduplication computationally expensive?

Text Deduplication can be computationally expensive, especially for large datasets or complex algorithms. However, there are various optimization techniques such as indexing, hashing, and parallel processing that can improve the performance and scalability of Text Deduplication techniques.
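As one example of the hashing idea, the sketch below groups records by a hash of their normalized text, which finds exact duplicates in a single pass instead of comparing every pair. The function names and the normalization rules are assumptions for illustration.

```python
import hashlib
from collections import defaultdict

def normalize(text):
    """Lowercase and collapse whitespace so trivial variants hash alike."""
    return " ".join(text.lower().split())

def group_by_fingerprint(records):
    """Bucket record indices by the SHA-1 hash of their normalized text."""
    buckets = defaultdict(list)
    for index, text in enumerate(records):
        digest = hashlib.sha1(normalize(text).encode("utf-8")).hexdigest()
        buckets[digest].append(index)
    # Only buckets with more than one member contain duplicates.
    return [indices for indices in buckets.values() if len(indices) > 1]

records = ["Free  Shipping", "free shipping", "Fast delivery", "FREE SHIPPING "]
print(group_by_fingerprint(records))  # [[0, 1, 3]]
```

This only catches records that are identical after normalization, so it does not replace fuzzy matching. It can, however, shrink the dataset cheaply before the more expensive pairwise comparison runs.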

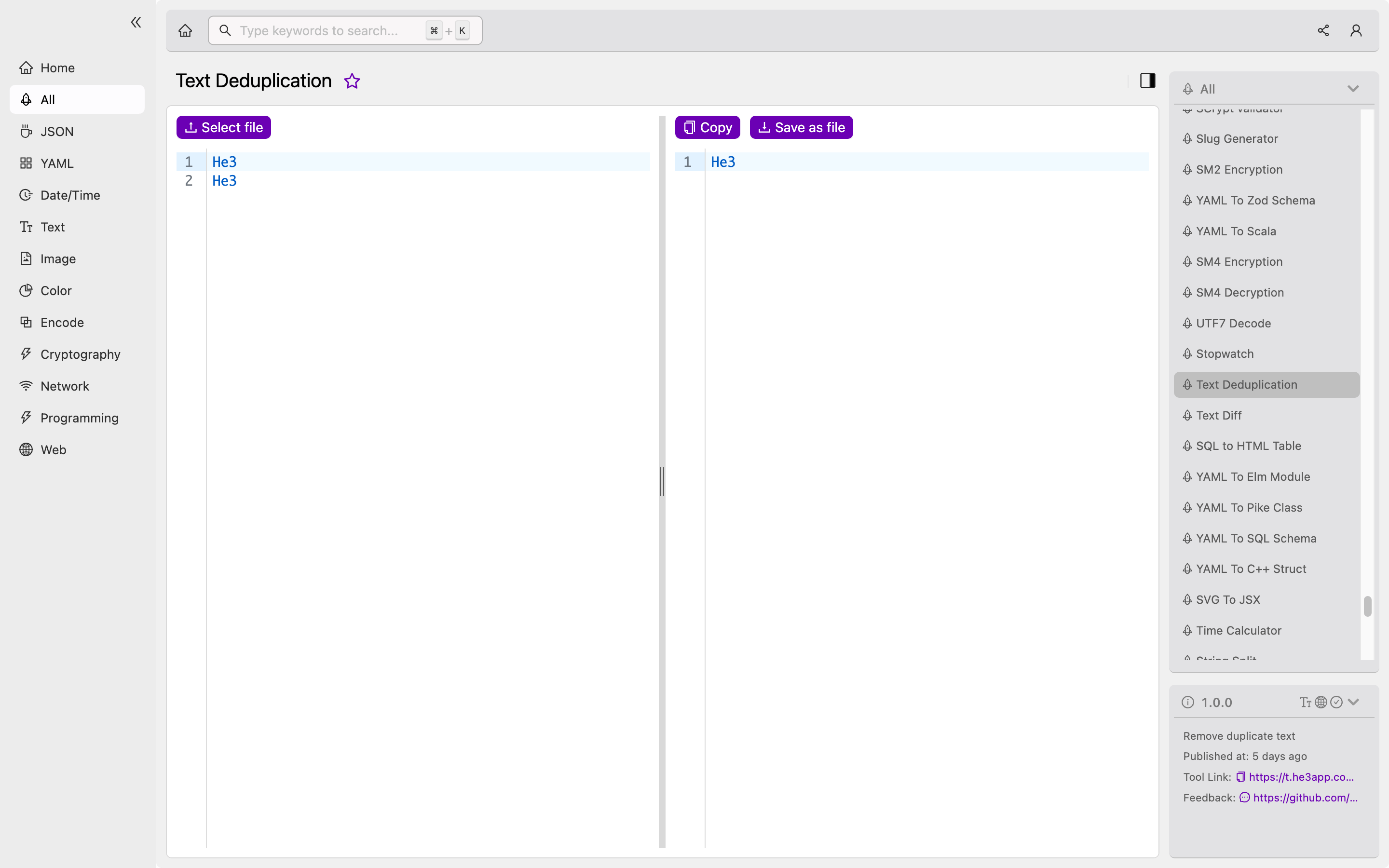

How to Use Text Deduplication

Now that you know what Text Deduplication is and how it works, you can start using it in your projects. Various libraries and tools provide Text Deduplication functionality, such as Python’s fuzzywuzzy, dedupe.io, and the Text Deduplication tool in He3 Toolbox (https://t.he3app.com?t280).

In conclusion, Text Deduplication is a powerful technique that can help identify and eliminate duplicated text data points in various scenarios. Developers can use various similarity measures, algorithms, and tools to implement Text Deduplication in their projects and improve the quality and efficiency of their data. For more information, you can check out Wikipedia’s article on Duplicate Detection or He3 Toolbox’s Text Deduplication tool.